Information and the History of Philosophy: Introduction

This article is part of the volume Information and the History of Philosophy.

At the start of the pandemic of 2020, facial recognition systems were momentarily thrown into disarray. How to identify faces, if everyone venturing outside covers mouths and noses with virus-repellent cloth?1

That these machine learning systems could soon make do with simply people’s eyes and facial shape is, to me, telling, not just because of the nauseating extent of continued on- and offline surveillance it reveals.2 It is curious, equally, because it illuminates how even the tiniest segments of our bodies can function as living, walking repositories of information. Not just our bodies: information extends from fossils to footprints, from DNA to demographics, from barometer readings to behavioural profiles, from statistical regularities to true randomness. Information is many things, information is all around us, information reaches into any and all aspects of people’s lives. Furthermore, once extracted, information can be stored, manipulated, transformed, analysed and transmitted to whomever or whatever stands in the right chain of communication (as well as being able to be lost or destroyed).

Information’s omnipresence and adaptability signal its significance: ethically, individually, socially, economically and politically. Yet, perhaps hiding behind covers of novel terminology (“information” only introduced itself to the English language in the fifteenth century), within philosophical histories it still craves sustained study.

This volume seeks to offer its readers such philosophico-historical reflection, in the guise of a set of individual, self-contained “confrontations” with information throughout history. Bundled into five sections, organised broadly chronologically, it contains 19 individual studies which grab a text, idea, or movement in the history of (thinking about) information and lay out its ethical, epistemic, socio-political and environmental significance, holding each up and drawing it closer to the light for maximum scrutiny, even where that occasionally gets uncomfortable.

Natures

Already well before the Common Era, we find philosophers theorising about the extent to which information is pervasive, manifest throughout the natural and social world. In “Yinyang information: Order, know-how, and a relation-based paradigm”, Robin R. Wang focuses on information in Classical Chinese philosophy, more specifically in yinyang thinking: that is, thinking attuned to yinyang.

In yinyang thinking, yin (陰) and yang (陽) are the ultimate opposing, interdependent, interacting, complementary principles that structure an ever-changing dynamic reality. This much is evident in the change of seasons, operations of natural elements, as well as in the relationships among all entities. It also governs human affairs, such as social relations, correct governance and how to maintain a healthy body. Order, in yinyang thinking, is emergent rather than predetermined and may constantly change, leaving an uncertain, not fully predictable, future.

Drawing on classical texts including the Shijing (first millennium BCE), and works from the Warring States period (476–221 BCE) such as the Zhuangzi, the Mohist canon, the Mengzi and the Daodejing, Wang demonstrates that information in yinyang thinking is understood to capture the yinyang dynamic order. In contrast with practices of divination or magic, such information-guided thinking rationalises: it attempts to manage uncertainty informationally, by a search for patterns and harmony.

An expansive vision of nature being informationally structured as found in ancient China resonates, moreover, with a number of philosophical movements in the Mediterranean in the final centuries BCE.

Plato of Athens, for example, turns to information in the individual, or as shared between people, with their focus on the basic act of informing, as Tamsin de Waal shows in “Plato on the act of informing: Meaningful speech and education”. If informing concerns the communication of a message from a sender to a receiver, what sources (if any) can information spring from? What sorts of information are there? Can information be stored, remembered? De Waal demonstrates that precisely Plato’s focus on informing as joint inquiry reverberates throughout his wider views of information.

Interestingly, De Waal points out that Plato was particularly critical of one form of information storage: writing. When in his Phaedrus dialogue, the figure of Socrates reports an African philosophical story of the Egyptian god Theuth boasting about their invention of “letters”, a.k.a. writing, their interlocutor Thamus counters that writing merely “produce[s] forgetfulness” in people:

Their trust in writing, produced by external characters which are no part of themselves, will discourage the use of their own memory within them. You have invented an elixir not of memory, but of reminding. (Phaedrus, 275a)

Practices of recording textual or numerical information had at this point, of course, already been around for millennia: from the Ishango bone calendars found in what today is the Democratic Republic of Congo dating back circa 20,000 years, to Sumerian cuneiform tablets (ca. 3200 BCE) to the Inca Quipu system of accounting found in the Andes region of South America around 1500 BCE.

Yet during much of history, orality would have been the dominant mode of information transfer. Be that either exclusively oral (as with Native North American and Australian Aboriginal intellectual traditions) or by using oral transmission paired with script (as was the case for early Buddhist, Vedic and Jain works, ancient texts of Judaism and the Catholic tradition). (Moreover, literacy and numeracy long had only limited distribution, remaining the preserve of a ruling elite, often tied to socio-economic and political power.)

Where writing crafts a semi-persistent record, detachable from its producer, oral information transfer requires sender and receiver to be near one another and, if immediate perishing of the message is to be avoided, some form of mnemonic and vocal reproductive capacities in the receiver, facilitating a chain of hearer-speakers in an oral tradition.

Thamus (as reported by Socrates, as written by Plato) reminds us that one’s choice of information vehicle is not neutral, but can have wide repercussions for its users, both cognitively and socially. (Something to which many living in attention-deprived, memory-externalising societies today can attest.)

Our very nature as cognitive and sensory information-processing animals fascinated Aristotle of Stagira, as Miira Tuominen makes clear in “On information in Aristotle: Nature, perception, knowledge”.

Tuominen shows that in Aristotle’s philosophy, information is everywhere. In nature, Aristotle sees a form of non-semantic, teleological information, as they regard any being as structured (that is, as being determined and regulated) by immaterial principles called “forms”, which inform matter. A wren, then, is structured by the form of wren-ness.

Those forms, though, may equally be transmitted, so as to inform knowers, which is where Aristotle’s theory of perception comes in. If I perceive a wren, the form in nature that structures the wren’s matter can come to be transmitted from the wren to myself as semantic (because meaningful) but non-linguistic information: I grasp the wren sensorily.

Tuominen points out that, epistemically, while Aristotle hence finds perception foundational for obtaining information, its role nonetheless remains limited: to get a cognising being anywhere near certainty or universal principles—in short, near real knowledge—further reasoning and dialectical assessment would be required. Information, we will learn time and again, does not equate to knowledge.

The view of information as operating in and through the natural world would, during these centuries, also begin to characterise nascent medical professions, as becomes clear from Chiara Thumiger’s longue durée study of the presumed psychiatric condition of phrenitis (roughly, “inflammation of the mind”).

In “Information and history of psychiatry: The case of the disease phrenitis”, Thumiger shows how medical information in the guise of diagnostic criteria, symptoms, labels, definitions and classifications shapes phrenitis as an “object of information” across centuries of psychiatric cultural transmission.

The backdrop of Thumiger’s study is formed by broader attempts to organise information, for instance in the gathering of huge library collections (think of the Assyrian clay tablet library of Ashurbanipal in the seventh century BCE, or the library of Alexandria in Egypt circa four centuries later), as well as the development of various medical corpora, such as the body of works associated with physician Hippocrates from the island of Kos in the Aegean, and in traditional Chinese medicine, the Han Dynasty text The Yellow Emperor’s Inner Canon, both dating from around the last centuries BCE. Collections such as these aided not just the persistence and transfer of (medical) information but also, given their availability, access to it.

Access

Does how one accesses information matter, epistemically? Hindu philosophical traditions during the first millennium and onward distinguish between texts that were “heard” (śruti in Sanskrit) and those “remembered” (smṛti). Significantly, the body of “heard” texts (originally transmitted orally), which often were oldest and without a known author, would count as the more authoritative, while the authored, written-down ones descended somewhat in the hierarchy of trust and reliability.

A key scholar in the Nyāya school of Hindu philosophy who did contribute authored texts was the great ninth-century philosopher Vācaspati Miśra. In “Vācaspati on aboutness and decomposition”, Nilanjan Das confronts Vācaspati’s work on information channels, in particular their stance on how cognitive information relates to the worldly objects that it is of.

Das shows that Vācaspati argues against “decompositionalism”, that is, against the idea that what my cognitive (and in particular: perceptual) experience is about can “decompose” into several components (such as, say, a picture in my head, and a resemblance between that picture and a worldly object). Instead, Vācaspati holds that information I gain through perception is naturally and automatically (without further conditions) about mind-independent particulars. The informativeness of perception is not due to further inference, but already lies within a successful act of perceiving itself.

That Vācaspati, in addition to producing self-originated work, also wrote abundant commentaries on key texts of Hindu philosophy, helped solidify this philosophical tradition around the turn of the first millennium.

Roughly in this era, another monumental undertaking in the trans-linguistic and cross-cultural safeguarding of information was taking place in and around Baghdad at the height of the ʿAbbāsīd Caliphate (750–1258). A massive-scale initiative of translating (mostly scientific) works from languages including Sanskrit, Syriac, and Greek into Arabic helped make these sources accessible to readers of that language. (Translation by itself, too, brings with it a host of philosophical questions: What is it to accurately carry information across to another language? Can translation ever be lossless, exact? What about the ethics of (not) translating, (not) making accessible?)

When Anna Ayse Akasoy investigates the circulation of information around the figure of Alexander of Macedon in the stunningly rich narrative records of the Arabian Nights stories, it is against this backdrop of such freshly accessible Greek sources in the Arabic language. More precisely, Akasoy’s “Seeing and recognition in the Arabian Nights and Islamic Alexander legends” zooms in on the reliability of information channels represented in this work of philosophical literature. Is oral information more trustworthy than written words? How about sensory modalities, can I rely on hearing over sight?

Akasoy demonstrates that in these stories, the primary and most reliable, trustworthy, mode of informing is deemed to be oral (or verbal), rather than visual. Moreover, the literature foregrounds how information, when transferred, is never simply “absorbed” as is, but that its successful transmission always requires contributions from both sender and recipient in a context of cooperation.

(Incidentally, Akasoy also shows how memes, from the Greek mīmēma (“that which is imitated”), or the spread of cultural information through copying, were not a 1970s invention, nor a product of GIFs on the Internet, but very much already alive in the figure of “Alexander-as-meme” in the Arabian Nights stories.)

Not a meme, but nonetheless similarly concerned with sensory-cognitive information processing, is the work of Abū-ʿAlī al-Ḥusayn ibn-ʿAbdallāh Ibn Sīnā.

As Luis Xavier López-Farjeat demonstrates in “Avicenna on information processing and abstraction”, Ibn Sīnā (or “Avicenna” to his Latin-speaking friends) was particularly concerned with the acquisition of mental content (ma’nā in Arabic), or the cognitive access of information. In agreement with Aristotle in the latter’s work On the Soul, to which they had access courtesy of the translation-into-Arabic enterprise, Ibn Sīnā holds that the human mind can process information from the external world by apprehending forms.

The question is: How? When I spot a wren, can I myself obtain its form for cognitive grasping? Or do I need external assistance to achieve such cognitive information?

A key notion for Ibn Sīnā here, as López-Farjeat shows, is that forms might be extracted from perceptible objects by abstraction. Precisely this insight links Ibn Sīnā’s theory of perception directly to contemporary discussions of perceptual epistemology, about intentional states and the exact informational contents we can gain from the senses, and which require further (cognitive or extra-cognitive) processing.

Compared to Ibn Sīnā, Tommaso d’Aquino (or “Thomas Aquinas”), writing in the thirteenth century, estimates the human capacity to process worldly information rather optimistically. As Cecilia Trifogli shows in “Thomas Aquinas on cognition as information”, this philosopher too had been inspired by Aristotle’s work on information extraction and processing in cognition, understood as the taking in or receiving of the form of a thing. Cognising, on this line, is taking on a form in addition to one’s own.

Yet what is it to take on such additional forms? What makes it that the form of the wren I grasp through perception is in fact of that little bird on the branch?

Key for d’Aquino here, as Trifogli flags, is the notion of intentional information, or the idea that the form one might grasp is “like”, or “agrees in nature” with the object in the world that it is of. Hence d’Aquino does not take it to be basic or inexplicable that the information gained through perception is about a particular mind-independent object, but points to conditions that must obtain. (What Vācaspati might call out as “decompositionalism”.)

Control

Moving beyond 1500, various tendencies that had already been bubbling below the surface began to gain shape more clearly. For instance, where information-storing manuscripts had enjoyed healthy circulation and copying in previous eras, the development of methods of mechanical reproduction—initially with woodblock printing (developed in Han Dynasty China before 220 CE, with uptake in subsequent centuries in India, North Africa, the Middle East, and adopted in Europe only from around 1300), next with the more flexible process of printing with movable type, developed around 1040 by Bì Shēng (990–1051) (echoed by Johannes Gutenberg’s work some centuries later)—facilitated growing mass production of information-holding documents.

Moreover, further tools for processing and organising information had taken shape, such as in encyclopedias, bibliographies and indexes to list summarised information. Tenth-century bibliographer Abū al-Faraj Muḥammad ibn Isḥāq al-Nadīm offered a compendium of all current knowledge in their Kitāb al-Fihrist (Book Catalogue); the Yǒnglè Encyclopedia (1403–1408) commissioned by the Yǒnglè Emperor (Zhu Di) in Ming Dynasty China gathered all available book knowledge into over 20,000 manuscript rolls; and Conrad Gessner took the inventive step of adding a thematic subject index to their “universal” list of all books, Bibliotheca universalis (1545–1549). In 1751 Denis Diderot and Jean-Baptiste le Rond d’Alembert began to publish their Encyclopedia, “to collect knowledge disseminated around the globe”.

Analogue computers were another appealing tool for information processing. Emerging as early as the first century BCE in the Aegean in the hand-powered Antikythera mechanism (used to predict astronomical positions), computing devices began to proliferate in the seventeenth century. We have, for example, John Napier of Merchiston’s calculator (called “Napier’s bones”, published 1617) and Blaise Pascal’s arithmetic machine, designed 1642.

A figure not to be missed here is Gottfried Wilhelm Leibniz, who, inspired by hexagrams of the classical I Ching (Yìjīng) text, not only studied binary arithmetic, which would later form the foundation of electronic computing, but also invented a “stepped reckoner” (1673) for mechanical calculation.

In the trans-historical paper “Leibniz as a precursor to Chaitin’s Algorithmic Information Theory”, Richard T.W. Arthur considers Leibniz’s work on information. Arthur shows some of the surprising ways in which Leibniz anticipated key components of the idea of digital information associated with twentieth-century Algorithmic Information Theory, as developed by Gregory Chaitin, including the idea of a computer program and an information-theoretic notion of complexity.

Significantly, Arthur also demonstrates that for Leibniz, information cannot ever be understood as disembodied, free-floating but—in an anti-idealist gesture—demands a physical basis. Information transfer requires energy; information must be materially embodied.
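
The information-theoretic notion of complexity mentioned above can be given a rough, hands-on illustration. In Algorithmic Information Theory, the complexity of a string is the length of the shortest program that produces it, a quantity that cannot be computed in general. The sketch below therefore swaps in a stand-in: the size of a string after general-purpose compression, which only gives an upper-bound flavour of the idea. The function name `compressed_size` and the two sample strings are my own illustrative choices, not anything drawn from Arthur’s chapter.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of the zlib-compressed form of `data`.

    Assumption: used here only as a crude proxy for algorithmic
    complexity, which itself is not computable.
    """
    return len(zlib.compress(data, 9))


# A highly regular string: a short rule ("repeat '01'") generates all of it.
regular = b"01" * 5000

# An irregular string of the same length, drawn from the OS random source.
irregular = os.urandom(10000)

print(len(regular), compressed_size(regular))
print(len(irregular), compressed_size(irregular))
```

The regular string shrinks to a small fraction of its length, while the random one barely shrinks at all: a short description exists for the former but, in all likelihood, not for the latter.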

Vehicles and embodiment of information are equally at stake in my own chapter, “Information visualisation in the Philosophical Transactions”, which focuses on approaches to providing information in visualised (not primarily text- or number-based) form, in one of the first scientific journals in Europe, the Royal Society of London’s Philosophical Transactions, between 1665 and 1886.

Distinguishing how visualisations in the broad categories of diagrams, maps and graphs function epistemically, I show that in this platform, visualisation does not function to offer a distinct kind of information that could not have been communicated in another form. Rather, visualised information functions to optimise user performance: it is cumbersome and costly to produce, but it assists readers in efficient information uptake.

Attention to the (social) uptake of information is one way to attempt to regulate or control information flows within societies. Other methods, including censorship or the vetting of information quality before documents get out into the wild, are equally gaining clearer shape in this period. For example, in 1538 Henry VIII requires that any printing of books in England gain pre-publication approval, while from the mid-sixteenth century onward the Tribunal of the Holy Office of the Inquisition—an arm of the Catholic Church—publishes successive indices of banned books to halt the spread of information it deemed ‘heretical’.

Stina Teilmann-Lock, in their “‘Dwindled into Confusion and Nonsense’: Information in a copyright perspective from the Statute of Anne to Google Books”, investigates the role of copyright in the distribution of information. Teilmann-Lock argues that while some emphasise that intellectual property rights obstruct access to information, the motivation behind the introduction of these rights was to stimulate the distribution of accurate, authenticated information. Teilmann-Lock also offers a caution: as the Internet lowers barriers to information sharing without guaranteeing quality or accuracy, we risk collapsing into “confusion and nonsense”.

The social life of information is also at the forefront in Lynn McDonald’s chapter on “Information in the pursuit of social reform”. Focusing on British and French-speaking authors in the seventeenth to early twentieth century, McDonald shows how a number of them strategically employed emerging empiricist scientific methods in gathering real-world data, and put that information to use (extracting, analysing and disseminating it) to effect social and political reform.

McDonald pays specific attention to the work of two key authors: political scientist Anne Louise Germaine de Staël-Holstein (1766–1817), who had advocated precise, “geometric” science in support of political and human rights, and statistician Florence Nightingale (1820–1910). Having received significant spurs from Abu Yūsuf Yaʻqūb ibn ʼIsḥāq al-Ṣabbāḥ al-Kindī’s (801–873) work on frequency analysis, the development of mathematical probability theory in the seventeenth century, and the eighteenth-century state-driven collection of demographic and economic data (hence the “stat-” prefix), by the nineteenth century, work in statistics and statistical information was taking a new shape. Nightingale, in addition to pioneering new forms of information visualisation through statistical graphing, was foundational in modelling how statistical information could be used to great effect in health care advocacy.

In meta-level assessment, McDonald also points out the risk of misinformation when towering scientists such as de Staël and Nightingale are ignored (as they have been) in sexist information regimes and efforts of canon formation. A risk which, as will become clear, only looms larger in successive periods.

(Dangerous) Systems

The philosophical life of information in the nineteenth century is marked by a pattern of increased systematisation, automation and organisation of information at scale.

In few places is such systematisation of information processes more evident than in the information needs and channels used to shape and maintain “empire”, as Edward Beasley shows for the case of Victorian Britain in their chapter “The nineteenth-century information revolution and world peace”.

Early Victorian culture saw two critical (technological) changes which sped up information flows: an efficient postal service, and near-instant telecommunication. An optimism only paralleled by the early days of the Internet took hold. Rapid information flows held a promise of improving people’s lives and livelihoods, of letting societies flourish; a process culminating in world peace.

Yet, as Beasley shows for Britain, such information orders did not only assist British-controlled imperialism, but also (to their alarm) empowered those who contrariwise sought to rise up against the coloniser, as was the case for people in India.

A like enthusiasm about the potency of information can be found in the work of philosopher Charles Babbage—known also for developing a “Difference Engine” (a calculator in the spirit of the Antikythera mechanism and seventeenth-century calculating machines, started in 1821), and for conceiving a more general-purpose computer called the “Analytical Engine” (conceived 1834) in collaboration with mathematician and programmer Augusta Ada King, Countess of Lovelace (1815–1852).

In “Charles Babbage’s economy of knowledge”, Renee Prendergast shows how Babbage’s enthusiasm for information machines was sparked at least in part by their potential economic benefits, combined with the view that information and knowledge should bear fruit for transforming the conditions of people’s lives.

Prendergast makes clear that—in a Baconian, empiricist spirit—Babbage saw good use for the gathering of accurate, experimental data and for inductively building upon such information. Operating with absent or imperfect (or worse, erroneous) information could risk rising costs in production and transactions, Babbage noted. Making calculation mechanical, with the appropriate investments in capital and machinery, could economise on the information and skill needed to perform a task, and so help reduce costs and eliminate error. Yet it could also result in replacing people altogether. Information quality and its operation can have direct, real-world economic and social consequences.

The nineteenth-century drive to systematise information made itself felt not just socially, but also in the move to organise, name and control nature. With the Swedish physician Carl Linnaeus’ System of Nature (1735), which had introduced its author’s system of biological classification, hovering in the background, a crucial contribution on this front came from the work of Gregor Mendel (1822–1884). Mendel’s experiments with pea plants in the mid-nineteenth century subsequently placed them as a founding figure of the discipline of genetics.

In “Mendel on developmental information”, Yafeng Shan investigates Mendel’s contributions to ideas about biological information, in particular concerning development. What, exactly, did Mendel understand by “development” in relation to biological organisms, and how does information figure in his assessments? Particularly and provocatively, Shan shows that the famous Mendelian laws of developmental information are not, in fact, about heredity; that is, about the genetic passing on of physical or mental traits from one generation to another. Heredity was not what Mendel studied or was concerned with. Rather it was the development of hybrids in progeny.

Where Mendel did not seek to tread, the Victorian-era statistician Francis Galton went. Like Nightingale, Galton made significant strides in mathematical statistics and analysis, developing the basis of the contemporary weather chart (in Meteorographica, 1863) and conceiving of the notion of a standard deviation to quantify variation in a normal distribution.

Incidentally, as Debbie Challis and Subhadra Das discuss in “Information and eugenics: Francis Galton”, Galton was also an advocate of the racist wing of pseudo-science known as “eugenics”. These two points are not coincidental. As Das and Challis show, information gathering was key to Galton’s development of eugenicist ideas.

During a period that involved significant imperial self-positioning, Galton jumped on that part of biology that Mendel did not concern themselves with, namely: heredity. Galton refined available methods for gathering and analysing information about people through statistical modelling, and did so at least in part in the process of seeking to mathematise biology and study how “intelligence” might be inherited.

From their historical vantage point of describing Galton’s ventures into eugenics, Challis and Das are moreover able to issue another caution. They argue that, instead of assuming that information collection and analysis can and will always be neutral, even mathematical operations can be discriminatory. (A point reinforced in recent publications such as Cathy O’Neil’s Weapons of Math Destruction of 2016, and Safiya Noble’s Algorithms of Oppression from 2018.) More strongly, Das and Challis show that not only does information gathering for eugenic purposes still occur, it is even prevalent today.

Insurgencies

In the year 1900, few could have foretold the exact manner in which the mathematised and increasingly automated use of information in science, industry, and societies at large would impact individuals, their communities, and the planet. Though the contours, we should say, were already becoming gradually clearer.

One person who helped shape the scientific gathering, use and analysis of information for the social domain—co-building the emerging field of sociology—was William Edward Burghardt Du Bois (1868–1963) in the United States.

In “The racialization of information: W.E.B. Du Bois, early intersectionality, and social information”, Reiland Rabaka pulls into focus two core points. First, the groundbreaking work carried out by Du Bois for sociological information. Du Bois was at the forefront of studying the “uniquely and unequivocally” American issues of race and anti-Black racism, segregation, colonisation and racial oppression, being one of the first scholars to investigate how these manifested themselves at the intersection with social class, gender and sexuality, religion, education and economics. Methodologically, Du Bois extended existing inductive approaches, including the use of local community surveys and interdisciplinary studies, and enriched sociology with political economy, history, anthropology and “archaeologies” of social phenomena (an innovation later often credited to a prominent theorist in France).

Second, resonating with McDonald’s attention to the exclusion of authors from political science and statistics, Rabaka also, historiographically, flags the artificial exclusion of Du Bois from the history of social information. Instead of being acknowledged as a groundbreaking sociologist, Du Bois is all too often ignored altogether, or only gets credit in one or more of sociology’s sub-disciplines. Such continued exclusion, such “epistemic apartheid” in United States sociology, Rabaka makes clear, raises fundamental questions about what results if information ecosystems get artificially and detrimentally separated.

The twentieth century was also the point at which information itself—its nature and characteristics—became the subject of sustained philosophical moves and reflections, as stands out in the final two chapters of the volume.

In “The many faces of Shannon information”, Olimpia Lombardi and Cristian López investigate contributions to the study of information by mathematician and engineer Claude Shannon (1916–2001), whose work on mathematical communication has reshaped the field of communication technology.

Placing themselves amid a host of different interpretations and connotations of what “information” really is, López and Lombardi focus specifically on how “Shannon information” must be understood. Information could, among other things, be conceived as something epistemic, to do with human (or animal) cognitive abilities; it could be a physical magnitude; or, as within the biological sciences, concern whatever is contained in a DNA sequence. (Moreover, they note that Shannon information has relevant links to the concept of algorithmic information which, via Chaitin, we can trace back to Leibniz.)

Perhaps surprisingly, Lombardi and López demonstrate that we would do well to set aside the assumption that information as Shannon saw it is a single thing. Instead, they show that an information pluralism must be correct here. There are, in different fields, various different, equally valid but mutually exclusive, interpretations of this single formal concept: information.
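
To make the “single formal concept” a little more tangible, here is a minimal sketch of Shannon’s entropy measure, H = −Σ p·log2(p), which gives the average information per symbol of a source in bits. The formula is Shannon’s; the function name `shannon_entropy`, the choice to estimate probabilities from symbol frequencies in a short string, and the sample messages are my own illustrative assumptions. Note that the calculation is silent on what the symbols mean or how they are physically realised, which is part of why the formalism admits several interpretations.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Average information per symbol, in bits.

    Assumption: symbol probabilities are estimated from their
    relative frequencies within `message` itself.
    """
    counts = Counter(message)
    total = len(message)
    probabilities = [n / total for n in counts.values()]
    return sum(-p * math.log2(p) for p in probabilities)


# Four equally likely symbols: each occurrence carries 2 bits.
uniform = shannon_entropy("abcd")

# One symbol repeated: nothing is uncertain, so 0 bits per symbol.
constant = shannon_entropy("aaaa")

# A skewed source falls somewhere in between.
skewed = shannon_entropy("aaab")

print(uniform, constant, skewed)
```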

In the final chapter, “Computers and system(s) science—the kingpins of modern technology: Lotfi Zadeh’s glimpses into the future of the information revolution”, Rudolf Seising lays out one of recent history’s visions of information futures as embodied in the work of Lotfi Zadeh (1921–2017).

A proponent of system theory, that is, the study of both natural and artificial (living, intelligent) systems, Zadeh emphasised the need for such study to be able to mathematically accommodate imprecise, uncertain or even vague information. To this end, Seising shows, one of Zadeh’s key contributions lay in developing a theory of “fuzzy” sets, where membership of elements in a set may be gradual (rather than assessed in binary terms of either belonging or not belonging, as would be the case in classical set theory). Only such fuzzier mathematics would be suited to understanding complex systems.
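
The contrast between binary and gradual membership can be sketched in a few lines. In the example below, the vague predicate “tall”, the 180 cm cut-off, and the 160–190 cm ramp are hypothetical choices of mine, made purely for illustration; the `min`, `max` and `1 - x` operations follow the standard definitions of fuzzy intersection, union and complement from Zadeh’s 1965 paper on fuzzy sets.

```python
def crisp_tall(height_cm: float) -> bool:
    """Classical set: a person either is or is not a member."""
    return height_cm >= 180.0  # hypothetical cut-off


def fuzzy_tall(height_cm: float) -> float:
    """Fuzzy set: degree of membership between 0.0 and 1.0.

    Assumption: a linear ramp from 160 cm (not tall at all)
    to 190 cm (fully tall); other shapes are equally possible.
    """
    low, high = 160.0, 190.0
    if height_cm <= low:
        return 0.0
    if height_cm >= high:
        return 1.0
    return (height_cm - low) / (high - low)


def fuzzy_and(a: float, b: float) -> float:
    """Intersection of two membership degrees."""
    return min(a, b)


def fuzzy_or(a: float, b: float) -> float:
    """Union of two membership degrees."""
    return max(a, b)


def fuzzy_not(a: float) -> float:
    """Complement of a membership degree."""
    return 1.0 - a


for height in (155.0, 175.0, 179.0, 181.0, 195.0):
    print(height, crisp_tall(height), round(fuzzy_tall(height), 2))
```

In the classical version, someone of 179 cm and someone of 181 cm fall on opposite sides of a sharp line; in the fuzzy version, their degrees of membership differ only slightly.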

In addition to his technical work, from the 1960s onward Zadeh championed the use of digital computers in education, research and libraries. Zadeh’s continued advocacy shows the need for information visions to remain alert to how the ever-growing role of information all around us can (technologically) be charted.

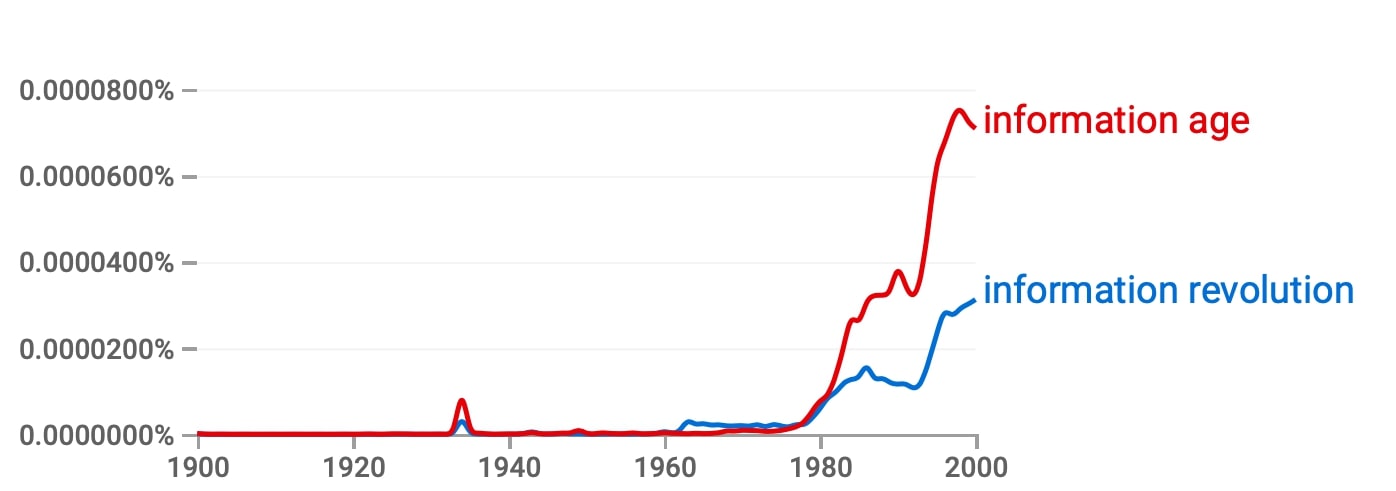

During the march of the twentieth and nascent twenty-first centuries, a sense grew that humanity was approaching a new era: an “information age”, accompanied by its own “information revolution” (see Figure 0.1).3

Over recent decades, the world’s total capacity to store information increased more than a hundred-fold, from 2.6 optimally compressed exabytes (an exabyte equalling 10¹⁸ bytes) in 1986 to 295 optimally compressed exabytes in 2007. The energy used by data centres running 24/7 to facilitate such lucrative tasks as hosting mailboxes, mining cryptocurrency and keeping everyone’s personal pics stored securely in “The Cloud” is having a drastic environmental impact.4 Some physicists have even speculated that the fundamental “building blocks” (so to say) of the universe might themselves be information (an idea that poet-physicist John Wheeler captured in the phrase “It from bit”).

Figure 0.1 Results for queries “information age” and “information revolution” in the Google Books corpus English (2019), period 1900–2000, case insensitive, with a smoothing of 0.

Information, or data in its raw form, has (in a not-so-environmentally friendly analogy) been called the “new oil”, signalling its status as a commodity around which to spawn profitable capitalist industries.5 Everything, from location, page clicks and spending patterns to busyness, transport, desires, fears and experiences, is monitored, tracked and monetised (as Shoshana Zuboff details in The Age of Surveillance Capitalism, 2019). All this happens with a degree of centralisation by a few monopolising players, such that a diagnosis of “information imperialism” would not be amiss.

The upside, if there is one, is that in recent years there has been a growing critical awareness of the extent to which we are impacted by this explosion of information generation and use. This growing attentiveness extends not just to the risks of misinformation, of epistemic exclusion, of echo chambers, of filter bubbles, of locking scientific information behind paywalls, but also, with Egyptian philosopher-god Thamus (via Plato), to the extent to which any of these informational operations are not neutral, but can actually impact on our psyches, on who we are, on our societies, lands, and by extension, our earth. We offer this volume to stimulate and solidify that rise in thinking about information both philosophically and historically.

1. Ngan, Mei, Patrick Grother, and Kayee Hanaoka. 2020. “Ongoing Face Recognition Vendor Test (FRVT) Part 6A: Face Recognition Accuracy with Masks Using Pre-COVID-19 Algorithms”. NIST IR 8311. Gaithersburg, MD: National Institute of Standards and Technology. https://doi.org/10.6028/NIST.IR.8311.

2. Wang, Zhongyuan, Guangcheng Wang, Baojin Huang, Zhangyang Xiong, Qi Hong, Hao Wu, Peng Yi, et al. 2020. “Masked Face Recognition Dataset and Application”. ArXiv:2003.09093 [Cs], March. http://arxiv.org/abs/2003.09093.

3. Michel, Jean-Baptiste, Yuan Kui Shen, Aviva Presser Aiden, Adrian Veres, Matthew K. Gray, The Google Books Team, Joseph P. Pickett, Dale Hoiberg, Dan Clancy, Peter Norvig, Jon Orwant, Steven Pinker, Martin A. Nowak, and Erez Lieberman Aiden. 2010. “Quantitative Analysis of Culture Using Millions of Digitized Books”. Science. https://doi.org/10.1126/science.1199644.

4. Whitehead, Beth, Deborah Andrews, Amip Shah, and Graeme Maidment. 2014. “Assessing the Environmental Impact of Data Centres Part 1: Background, Energy Use and Metrics”. Building and Environment 82 (December): 151–9. https://doi.org/10.1016/j.buildenv.2014.08.021.

5. The Economist. 2017. “The World’s Most Valuable Resource Is No Longer Oil, but Data”. 6 May 2017.